Artificial intelligence is no longer a futuristic vision but a tangible part of financial reality. Financial institutions increasingly rely on AI technologies for tasks ranging from credit scoring to fraud detection. Yet, the crucial question remains: How willing are people to delegate financial decision-making to machines?

The bachelor thesis by Svetlana Pobivanz examines how AI autonomy and communicative interaction design influence trust in AI-based investment advice. Such trust is essential for the adoption of digital banking and robo-advisory services.

Research Objective:

The study investigates how two AI system characteristics, autonomy and interaction style, affect user trust. Previous research (Lee & See, 2004; Siau & Wang, 2018) highlighted trust as central to technology acceptance; this study extends that work by considering the psychological and design-related factors that affect trust in financial AI.

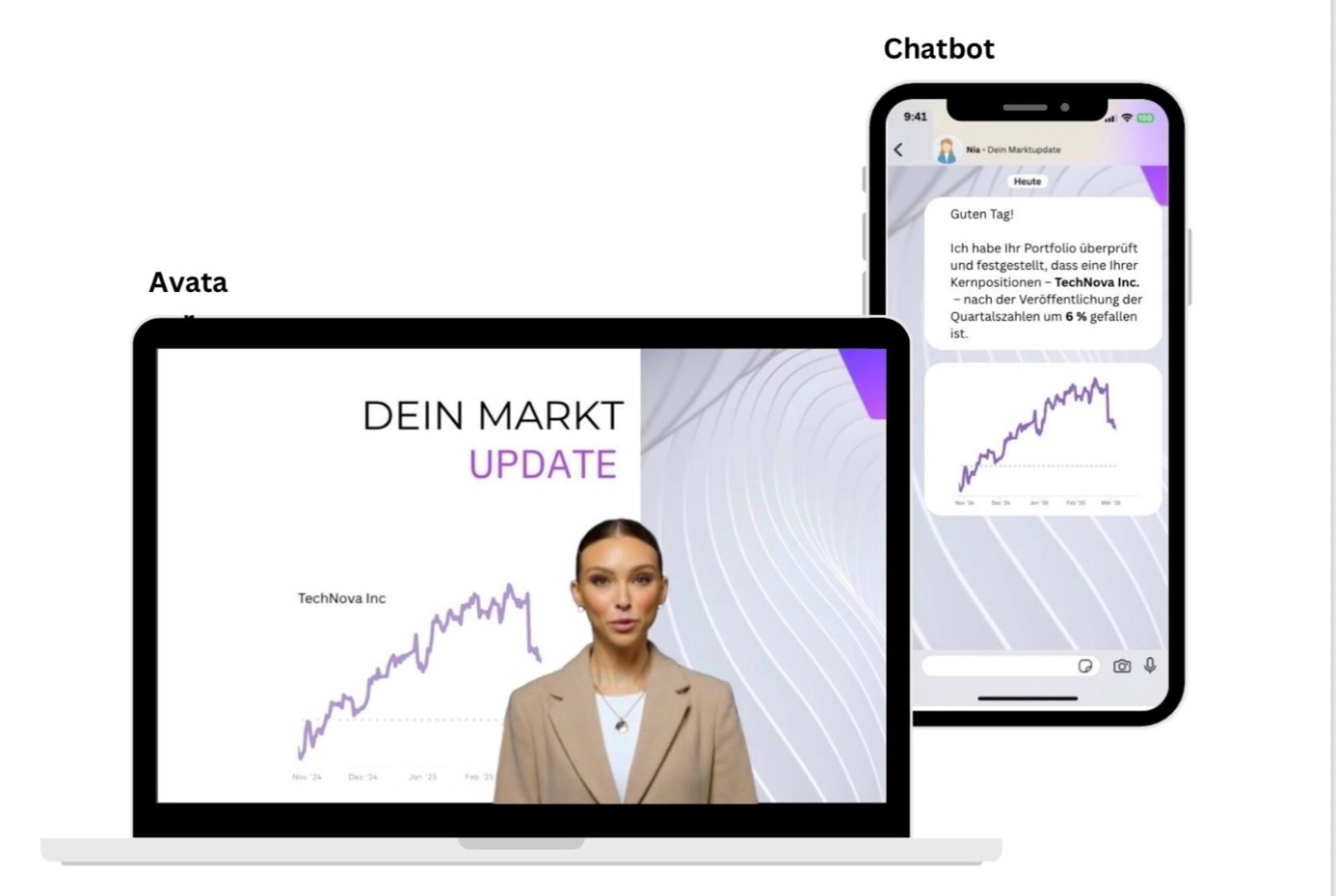

Specifically, the research explores which configuration is perceived as more trustworthy: a semi-autonomous AI that leaves the final choice to the user or a fully autonomous AI, and a human-like avatar or a text-based chatbot.

Method:

A 2×2 experimental design was employed with 197 participants who were randomly assigned to one of the four experimental conditions. Participants interacted with a fictional AI portfolio assistant simulating investment recommendations.

- In the full-autonomy condition, the AI made decisions independently.

- In the semi-autonomy condition, the AI offered three options for the user to select.

- The interaction design varied between a human-like avatar capable of speech and facial expressions and a text-based chatbot.
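The random assignment to the four cells of the 2×2 design can be sketched as follows. This is only an illustration: the thesis summary does not describe how randomization was implemented, so the function name `assign_conditions`, the condition labels, the seed, and the choice of a balanced shuffle are all assumptions.

```python
import random
from collections import Counter
from itertools import product

# Factor levels as described in the study; the labels themselves are illustrative.
AUTONOMY = ("full", "semi")
INTERFACE = ("avatar", "chatbot")


def assign_conditions(n_participants, seed=0):
    """Randomly assign participants to the four cells of a 2x2 design.

    Assumption: the cells are repeated to cover all participants and then
    shuffled, which keeps group sizes within one participant of each other.
    """
    cells = list(product(AUTONOMY, INTERFACE))
    repeats = -(-n_participants // len(cells))  # ceiling division
    pool = (cells * repeats)[:n_participants]
    random.Random(seed).shuffle(pool)
    return pool


assignment = assign_conditions(197)
cell_sizes = Counter(assignment)
print(cell_sizes)
```

With 197 participants, a balanced scheme like this cannot produce four equal groups, so one cell ends up with one participant more than the others.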

Trust was assessed using the Trust in Automation Scale (Jian et al., 2000) and the Trust in Artificial Intelligence Scale (Hoffman et al., 2021), evaluating perceived reliability, competence, and transparency.

Key Findings:

Contrary to expectations, neither AI autonomy nor interaction design had a significant main effect on trust (p > .05). Descriptively, avatar-based advisors were perceived as slightly more personable, but this did not translate into significantly higher trust.
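To illustrate what a test for a main effect in a 2×2 design computes, the sketch below derives the F statistic for each factor from cell data. It is not the thesis's analysis: the scores are made up, the helper `main_effect_f` is hypothetical, and the formula assumes equal cell sizes, which would not hold exactly for 197 participants.

```python
from statistics import mean

# Hypothetical trust scores per cell (autonomy, interface); NOT data from the thesis.
scores = {
    ("full", "avatar"): [4, 5, 6],
    ("full", "chatbot"): [3, 5, 7],
    ("semi", "avatar"): [5, 6, 7],
    ("semi", "chatbot"): [4, 5, 6],
}


def main_effect_f(cells, factor_index):
    """F statistic for one main effect in a balanced two-way design."""
    all_values = [v for values in cells.values() for v in values]
    grand_mean = mean(all_values)

    # Pool observations by the level of the chosen factor.
    by_level = {}
    for key, values in cells.items():
        by_level.setdefault(key[factor_index], []).extend(values)

    # Between-level sum of squares for the factor.
    ss_effect = sum(
        len(values) * (mean(values) - grand_mean) ** 2
        for values in by_level.values()
    )
    df_effect = len(by_level) - 1

    # Within-cell (error) sum of squares.
    ss_error = sum(
        (v - mean(values)) ** 2 for values in cells.values() for v in values
    )
    df_error = len(all_values) - len(cells)

    return (ss_effect / df_effect) / (ss_error / df_error)


f_autonomy = main_effect_f(scores, 0)
f_interface = main_effect_f(scores, 1)
print(f"F(autonomy) = {f_autonomy:.3f}, F(interface) = {f_interface:.3f}")
```

Each F value would then be compared against the F distribution with the corresponding degrees of freedom to obtain a p value. A non-significant result such as the one reported means the differences between group means are small relative to the variation within groups.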

A regression analysis revealed that participants’ general attitudes toward AI were the strongest predictor of trust (b = .53; β = .45). Positive prior attitudes toward AI were consistently associated with higher trust, irrespective of system design. This aligns with the Technology Acceptance Model (Venkatesh & Davis, 2000), emphasizing trust as a key determinant of technology adoption.
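The two reported coefficients describe the same relationship on different scales: the unstandardized b is in raw scale units, while the standardized β is in standard deviations, with β = b · s_x / s_y. The sketch below shows this conversion for a single predictor. The data and the helper names `slope` and `standardize` are hypothetical, and the thesis model may have included further predictors.

```python
from statistics import mean, stdev

# Hypothetical ratings on a 1-5 scale; NOT data from the thesis.
attitude = [2.0, 3.0, 3.5, 4.0, 5.0]
trust = [2.5, 3.0, 3.0, 4.0, 4.5]


def slope(x, y):
    """Ordinary least squares slope of y regressed on a single predictor x."""
    mean_x, mean_y = mean(x), mean(y)
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    variation_x = sum((a - mean_x) ** 2 for a in x)
    return covariation / variation_x


def standardize(values):
    """Convert values to z-scores (mean 0, standard deviation 1)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


b = slope(attitude, trust)                   # unstandardized coefficient
beta = b * stdev(attitude) / stdev(trust)    # standardized coefficient
beta_check = slope(standardize(attitude), standardize(trust))

# Ratio of standard deviations implied by the reported b = .53 and beta = .45,
# assuming both values refer to the same predictor in the same model.
implied_sd_ratio = 0.45 / 0.53

print(f"b = {b:.3f}, beta = {beta:.3f}, beta via z-scores = {beta_check:.3f}")
print(f"implied s_x / s_y = {implied_sd_ratio:.2f}")
```

Under that assumption, the reported pair implies that the attitude measure varied somewhat less than the trust measure (s_x / s_y ≈ 0.85).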

Conclusion:

The results show that trust in AI-supported investment advice depends little on interaction design or degree of autonomy. Neither the choice between avatar and chatbot nor the level of autonomy had a significant impact on trust. Although avatars were perceived as more likeable, this did not translate into greater trust.

Rather, users' general attitude towards AI is decisive. This is consistent with research suggesting that trust is built primarily through transparency, traceability and comprehensible communication (Hoffman et al., 2023; Choung, David & Ross, 2023). User-friendly presentations can improve the experience, but they do not replace the need for clear explanations and education.

Overall, the results show that promoting knowledge, transparency and a positive attitude is key to building trust in AI-based investment advice and strengthening the acceptance of such systems in the long term.

References:

Choung, H., David, P., & Ross, A. (2023). Trust in AI and Its Role in the Acceptance of AI Technologies. International Journal of Human–Computer Interaction, 39(9), 1727–1739. https://doi.org/10.1080/10447318.2022.2050543

Hoffman, R. R., Mueller, S. T., Klein, G., & Litman, J. (2023). Measures for explainable AI: Explanation goodness, user satisfaction, mental models, curiosity, trust, and human-AI performance. Frontiers in Computer Science, 5, 1096257. https://doi.org/10.3389/fcomp.2023.1096257

Hoffman, R. R., Mueller, S. T., Klein, G., & Litman, J. (2021). Measuring Trust in the XAI Context. https://doi.org/10.31234/osf.io/e3kv9

Jian, J.-Y., Bisantz, A. M., & Drury, C. G. (2000). Foundations for an Empirically Determined Scale of Trust in Automated Systems. International Journal of Cognitive Ergonomics, 4(1), 53–71. https://doi.org/10.1207/S15327566IJCE0401_04

Lee, J. D., & See, K. A. (2004). Trust in Automation: Designing for Appropriate Reliance. Human Factors: The Journal of the Human Factors and Ergonomics Society, 46(1), 50–80. https://doi.org/10.1518/hfes.46.1.50_30392

Siau, K., & Wang, W. (2018). Building Trust in Artificial Intelligence, Machine Learning, and Robotics. https://www.researchgate.net/publication/324006061_Building_Trust_in_Artificial_Intelligence_Machine_Learning_and_Robotics

Venkatesh, V., & Davis, F. D. (2000). A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Management Science, 46(2), 186–204. https://doi.org/10.1287/mnsc.46.2.186.11926